Diffusion-based image generation

- Which software falls into this category?

Stable Diffusion by an institute at a German university, Midjourney, Dall-E2 by OpenAI (with links to Microsoft and Elon Musk), Imagen by Google and various spin-offs. In the past months, they have become “spectacularly” successful in capturing the public’s attention and imagination. - What are the key machine learning technologies called?

See the video by the University of Nottingham for a more technical explanation. This is just a list of the 3 main ingredients that all exponents seem to use:- The Latent Diffusion Model algorithm or architecture handles the removing of random noise in an image using neural networks training specifically to perform this task.

That noise is artificially added to avoid giving the same result every time a question is asked, and thus introduce an element of surprise. You can disable the randomness by controlling the algorithm’s “seed” number. - Embedding of textual input into the learning process, using for example the GPT·3 algorithm. This encourages the models to produce images matching the input text rather than just producing any image: after all, you can’t expect to get what you are looking for in a noisy image unless you tell the software what you are looking for.

- Classifier-free guidance more or less forces the models to produce images that match the input prompt. This is done by determining (subtracting) and increasing (multiplying) the effect of using GPT-3 and the textual prompt.

- The Latent Diffusion Model algorithm or architecture handles the removing of random noise in an image using neural networks training specifically to perform this task.

- Do they use Google Search to find images whenever an output image is requested?

No. Empirical evidence:

* If that were the case the result would change over time: Google Search keeps finding new information on the internet on a daily basis. For people who develop and test algorithms, stable reference data (“models”) is actually a benefit.

* And the technology would “know” roughly everything that Google has found. This is not the case: Google may contain captioned images that LDM’s smaller data set doesn’t know about. The data sets for LDM are smaller largely for practical reasons (compute cost, network load, compute time, and increasingly… copyrights).

* Some versions of LDM can run while your computer is offline. - Does LDM contain actual images (e.g. of a cat or a Picasso painting) that get included in the output?

The answer depends on your definition of what counts as an image. Stable Diffusion has been trained with about 600 million esthetically pleasing image<>text pairs. So these will include cats and paintings by Picasso (after all, both keywords give convincing results). But the algorithm doesn’t (for engineering reasons) run on images themselves (“in pixel space”), but in a heavily encoded space (“lower dimensional” or ”latent space”). Explaining how the processing in such algorithms works is nonintuitive (see link) because it works very differently from how humans would perform this task.

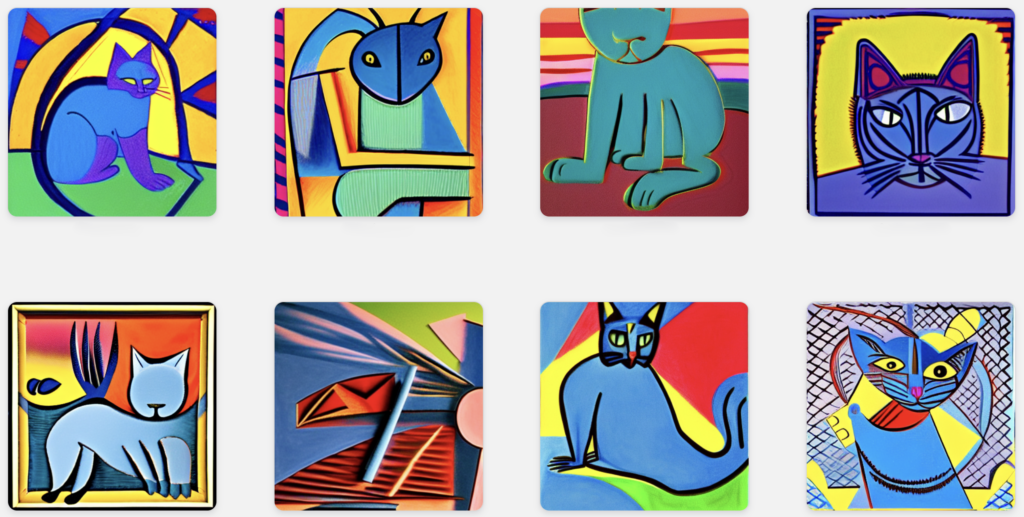

So you could argue that the model knows how to detect (and thus generate!) cats, but certainly does not generate cat images in a straightforward way using the cat images submitted during training. To generate a blue cat, the model thus has a learned knowledge of “blueness” and “catness” and can combine both. In contrast, a non-artist would first tackle catness and then blueness: make a suitable cat image (the hard part) and then turn it blue using some algorithm (say in Photoshop) that only changes the coloring. Or a painter might first mix appropriate blue colors, and then start painting a cat. The LDM algorithms, in contrast, do not target goals like “blueness”, “catness” and “in the style of Picasso” (and a lot more) in a planned sequence.

(DiffusionBee, stand-alone implementation of Stable Diffusion for MacOS)

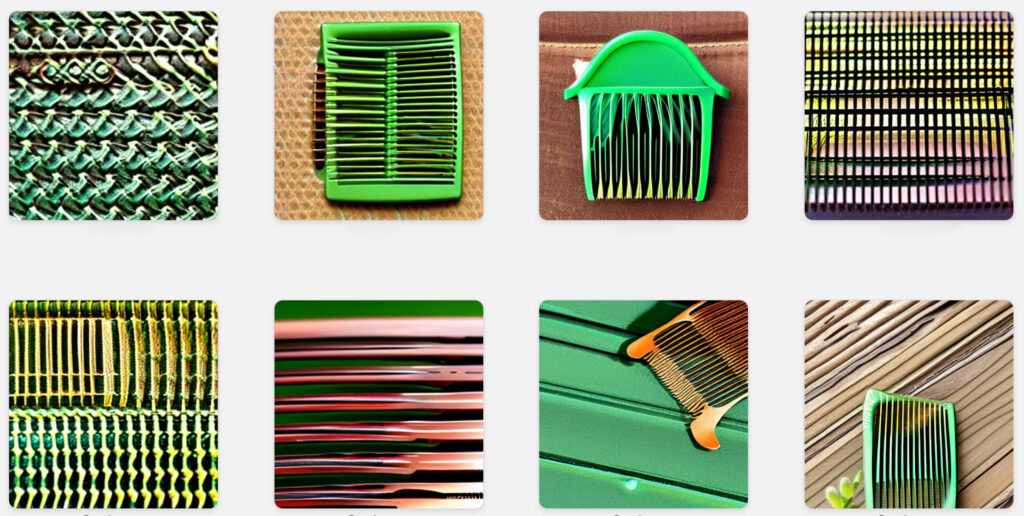

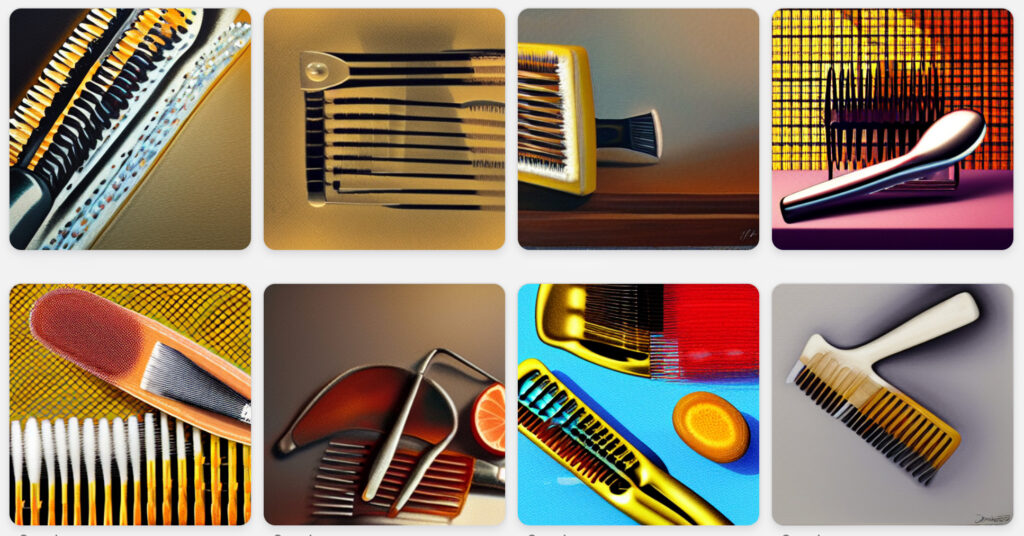

- If this software generates a picture of a comb, is that based on one single input image of a comb, an average of all available comb images, or something way more complex?

Assuming the model’s training set contains many comb images, it would be weird and risky to just pick one: it would waste information that could be obtained from the other examples of combs. But how do you generate a typical comb assuming you don’t have a dedicated parameterized model of all alternative manufacturable combs? To the software, comb is just a word (“label”), and it was never even told what part of the image corresponds to “comb”. So you might picture the software having learned that on images labelled with ”comb”, there is generally a series of longish parallel lines (see output picture). But in reality, the software doesn’t know “long” or “parallel” either and instead came up with its own set of quantities (“parameters”) that fit its transformation models.

- Does LDM contain codified knowledge like what human hands look like in 3D or a model of how reflective surfaces work?

No. The facts that a human hand has 5 fingers, that left and right hands are similar but different, and rules to put the right hand on the correct arm are not – as such – fed into the system. The system can more or less learn such things by being fed proper examples, and by being rewarded/punished during the learning process. This doesn’t mean that it gets hands right most of the time (in fact, this is often clearly wrong), but shows why it is not impossible. - How does LDM get perspectives (more or less) right?

I suspect that there is, again, no specific model about geometry or vanishing points. It just leans to get perspective somewhat right based on correct examples, and getting rewarded during the learning process if it gets it more or less right. - How does LDM get lighting (more or less) consistent across an image?

Same answer as question on perspective, I think. But on the other hand, there may always be some extra layer in the processing architecture or the learning process that was put there to stress a specific criterium that users (subconsciously) consider important. - How much computation is done beforehand (“training”) and how much per task?

Training the model on 600 million images, each with captions, takes a lot of computation. In one case it took 256 high-end graphics cards computing collectively almost 1 month to process all these images. But the output model is highly reusable. In contrast, converting a single text to an image using this big model takes say 1 minute per image on a local machine – but it only results one possible answer to one request. - Does this require a graphics processor?

In practice, a GPU is needed for speed. In theory, any computer (with enough memory) will get the job done… eventually. Typical minimal requirements are an Nvidia RTX class graphics card with 8-16 GB of (video, VRAM) memory. Or an Apple M1 or M2 class computer with 8+ GB of (unified) memory. Obviously all this depends on the size of the model that has been pre-trained, on the image size, number of de-noising steps, and your patience. - Does this require special machine learning cores (like Apple has)?

There are ports of the Stable Diffusion software that can make use of the ML cores. In the case of Apple, applications can indicate a preference of having code run on an ML core rather than on a GPU core, but the decision is actually made by the operating system. Measured performance differences are not very significant, but the question remains whether the tester is certain that the ML hardware was being used (they may have only enabled the potential use of the ML cores).